Introduction to Automata Theory

Automata theory stands as one of the fundamental pillars of computer science, providing the theoretical foundation for understanding how computational systems process information. This mathematical framework explores abstract machines and their computational capabilities, forming the backbone of modern computing systems, programming languages, and digital circuits.

What is Automata Theory?

Automata theory is a branch of theoretical computer science that studies abstract computing devices or "automata" and the computational problems that can be solved using these devices. An automaton is a mathematical model of a machine that takes input, processes it according to predefined rules, and produces output or changes its internal state.

The field encompasses various types of computational models, each with different levels of complexity and computational power. These models help computer scientists understand what problems can be solved algorithmically and how efficiently they can be solved.

Historical Background and Development

The word "automaton" traces back to ancient Greece, where mechanical devices called "automata" were built to perform specific tasks. The modern mathematical foundation, however, was laid between the 1930s and the 1950s by pioneering mathematicians and computer scientists.

Alan Turing's work in the 1930s introduced the concept of Turing machines, which became central to understanding computational limits. Warren McCulloch and Walter Pitts developed neural network models, while John von Neumann explored self-replicating machines. These contributions collectively shaped automata theory into its current form.

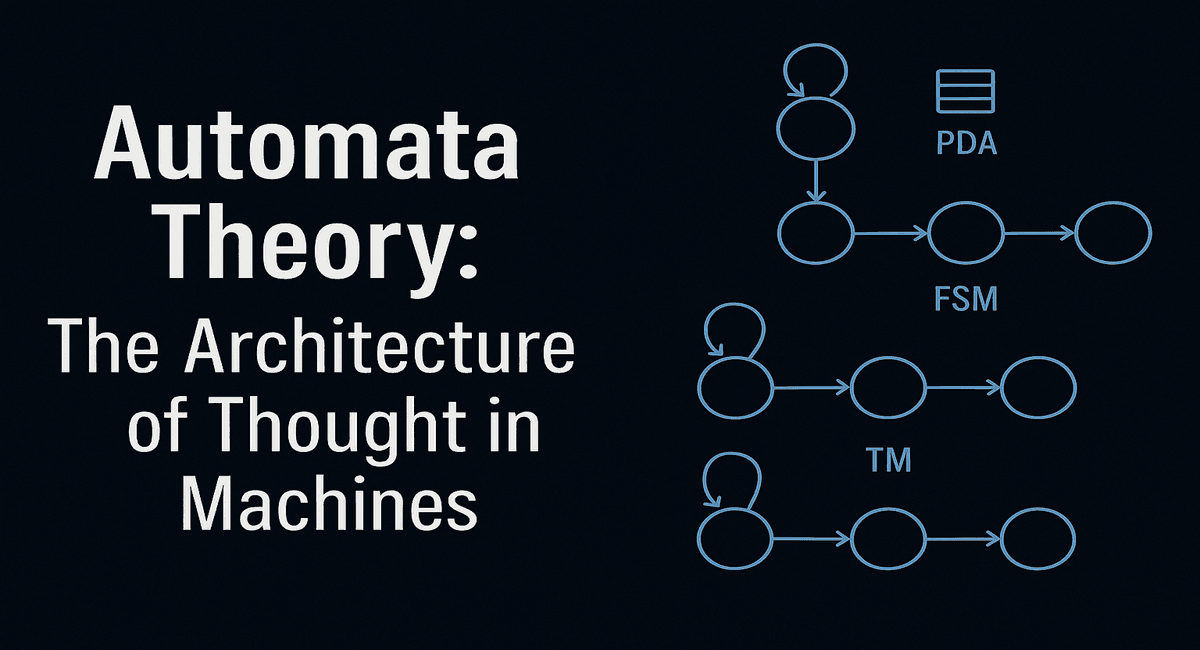

Types of Automata: A Comprehensive Overview

Finite Automata (FA)

Finite automata are the simplest computational model. Such a machine has a finite number of states and no memory beyond its current state, so it can track only a bounded amount of information about the input it has read.

Deterministic Finite Automata (DFA):

Has exactly one transition for each input symbol from every state

Recognizes exactly the regular languages

Used in lexical analysis, pattern matching, and protocol design
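The DFA properties above can be illustrated with a minimal sketch. The machine below is a hypothetical example (not taken from any particular textbook): it accepts binary strings containing an even number of 1s, and the state names and table layout are assumptions chosen for readability.

```python
# Hypothetical DFA over the alphabet {"0", "1"} that accepts binary strings
# with an even number of 1s. Every (state, symbol) pair has exactly one entry,
# which is what makes the machine deterministic.
DFA_TRANSITIONS = {
    ("even", "0"): "even",
    ("even", "1"): "odd",
    ("odd", "0"): "odd",
    ("odd", "1"): "even",
}
DFA_START = "even"
DFA_ACCEPTING = {"even"}


def dfa_accepts(word: str) -> bool:
    """Run the DFA on `word` and report whether it ends in an accepting state."""
    state = DFA_START
    for symbol in word:
        key = (state, symbol)
        if key not in DFA_TRANSITIONS:
            raise ValueError(f"symbol {symbol!r} is not in the alphabet")
        state = DFA_TRANSITIONS[key]
    return state in DFA_ACCEPTING
```

For instance, `dfa_accepts("1010")` is true (two 1s) and `dfa_accepts("1000")` is false (one 1). The only memory the machine uses is the variable `state`.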

Non-deterministic Finite Automata (NFA):

Can have multiple transitions for the same input symbol

May include epsilon transitions (transitions that consume no input)

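Equivalent in power to DFA, since the subset construction converts any NFA into a DFA (with up to 2^n states for an n-state NFA), but often more intuitive to design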
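One way to see why NFAs are no more powerful than DFAs is to simulate an NFA by tracking the set of states it could be in, which is the idea behind the subset construction. The sketch below assumes a hypothetical NFA that accepts strings over {a, b} which either end in "ab" or consist only of a's; the state names and the use of the empty string as the epsilon marker are illustrative choices.

```python
EPSILON = ""  # assumed marker for transitions that consume no input

# Hypothetical NFA: q0 branches by epsilon moves into two sub-machines.
NFA_TRANSITIONS = {
    ("q0", EPSILON): {"q1", "q3"},
    ("q1", "a"): {"q1", "q2"},   # two choices on the same symbol
    ("q1", "b"): {"q1"},
    ("q2", "b"): {"q_end"},      # reached only after reading "...ab"
    ("q3", "a"): {"q3"},         # loops while the input is only a's
}
NFA_START = "q0"
NFA_ACCEPTING = {"q_end", "q3"}


def epsilon_closure(states):
    """Return all states reachable from `states` using only epsilon moves."""
    closure = set(states)
    stack = list(states)
    while stack:
        state = stack.pop()
        for nxt in NFA_TRANSITIONS.get((state, EPSILON), set()):
            if nxt not in closure:
                closure.add(nxt)
                stack.append(nxt)
    return closure


def nfa_accepts(word: str) -> bool:
    """Simulate the NFA by tracking every state it could currently be in."""
    current = epsilon_closure({NFA_START})
    for symbol in word:
        moved = set()
        for state in current:
            moved |= NFA_TRANSITIONS.get((state, symbol), set())
        current = epsilon_closure(moved)
    return bool(current & NFA_ACCEPTING)
```

Each value taken by `current` corresponds to one state of the equivalent DFA, so the simulation builds only the part of that DFA that the input actually visits.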

Pushdown Automata (PDA)

Pushdown automata extend finite automata with a stack. This additional memory lets (nondeterministic) PDAs recognize exactly the context-free languages, making them strictly more powerful than finite automata.

Key characteristics include:

Stack-based memory for storing and retrieving information

Ability to handle nested structures like parentheses matching

Essential for parsing programming languages and compiler design
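The role of the stack can be sketched with a bracket matcher. This is not a formal PDA with an explicit transition relation; it is a simplified, single-state recognizer in the same spirit, and the function name and the choice to ignore non-bracket characters are assumptions made for the example.

```python
# Map each closing bracket to the opening bracket it must match.
PAIRS = {")": "(", "]": "[", "}": "{"}
OPENERS = set(PAIRS.values())


def is_balanced(text: str) -> bool:
    """Check bracket nesting with an explicit stack, as a PDA would."""
    stack = []
    for ch in text:
        if ch in OPENERS:
            stack.append(ch)          # push on an opening bracket
        elif ch in PAIRS:
            # pop on a closing bracket and compare with the expected opener
            if not stack or stack.pop() != PAIRS[ch]:
                return False
        # any other character is ignored in this sketch
    return not stack                  # accept only if nothing is left open
```

A finite automaton cannot do this for arbitrarily deep nesting, because the depth to remember is unbounded; the stack supplies exactly that missing memory.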

Linear Bounded Automata (LBA)

Linear bounded automata operate on a tape whose length is linearly bounded by the length of the input string. Nondeterministic LBAs recognize exactly the context-sensitive languages and represent an intermediate level of computational power between pushdown automata and Turing machines.

Turing Machines

Turing machines are the most powerful model in the classical automata hierarchy. A Turing machine consists of an unbounded tape, a read/write head, and a finite control unit.

Features of Turing machines:

Unlimited memory capacity through infinite tape

By the Church-Turing thesis, can simulate any algorithm that can be described precisely

Defines the theoretical limits of computation

Foundation for understanding computational complexity
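The tape, head, and finite control can be made concrete with a small simulator. The sketch below is illustrative only: the rule table is a hypothetical machine that adds one to a binary number, the state names are invented for the example, and a step limit stands in for the fact that a real Turing machine may never halt.

```python
BLANK = "_"

# Hypothetical rule table for binary increment:
# (state, symbol read) -> (next state, symbol to write, head move)
INCREMENT_RULES = {
    ("scan", "0"): ("scan", "0", "R"),     # walk right to the end of the input
    ("scan", "1"): ("scan", "1", "R"),
    ("scan", BLANK): ("carry", BLANK, "L"),
    ("carry", "1"): ("carry", "0", "L"),   # 1 + carry = 0, keep carrying
    ("carry", "0"): ("halt", "1", "L"),    # 0 + carry = 1, done
    ("carry", BLANK): ("halt", "1", "L"),  # overflow: write a new leading 1
}


def run_turing_machine(rules, tape_input, start="scan", halt="halt", max_steps=10_000):
    """Run a single-tape machine and return the non-blank tape contents."""
    tape = dict(enumerate(tape_input))     # sparse tape: position -> symbol
    head, state, steps = 0, start, 0
    while state != halt:
        if steps >= max_steps:
            raise RuntimeError("step limit reached without halting")
        symbol = tape.get(head, BLANK)
        # A missing rule raises KeyError; this sketch does not model rejection.
        state, write, move = rules[(state, symbol)]
        tape[head] = write
        head += 1 if move == "R" else -1
        steps += 1
    return "".join(tape[i] for i in sorted(tape)).strip(BLANK)
```

Running `run_turing_machine(INCREMENT_RULES, "1011")` returns `"1100"`. The dictionary grows in either direction as the head moves, which is how the sketch models a tape with no fixed length.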

Applications of Automata Theory in Computer Science

Compiler Design and Programming Languages

Automata theory plays a crucial role in compiler construction. Lexical analyzers use finite automata to recognize tokens, while parsers employ pushdown automata to analyze syntactic structures. This application ensures that programming languages are processed correctly and efficiently.
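As a rough illustration of token recognition, the sketch below builds a tiny lexer from regular expressions. The token names and the toy language are assumptions made for the example. Note also that Python's `re` module is a backtracking matcher rather than a compiled DFA, so this shows the idea of regular-language tokenization, not how a production lexer generator works internally.

```python
import re

# Hypothetical token classes for a toy expression language.
TOKEN_SPEC = [
    ("NUMBER", r"\d+"),
    ("IDENT", r"[A-Za-z_]\w*"),
    ("OP", r"[+\-*/=()]"),
    ("SKIP", r"\s+"),
]
# Combine the classes into one pattern with a named group per token type.
TOKEN_RE = re.compile("|".join(f"(?P<{name}>{pattern})" for name, pattern in TOKEN_SPEC))


def tokenize(source: str):
    """Split `source` into (token type, text) pairs, dropping whitespace."""
    tokens = []
    pos = 0
    while pos < len(source):
        match = TOKEN_RE.match(source, pos)
        if match is None:
            raise SyntaxError(f"unexpected character {source[pos]!r} at position {pos}")
        if match.lastgroup != "SKIP":
            tokens.append((match.lastgroup, match.group()))
        pos = match.end()
    return tokens
```

For example, `tokenize("x = 42 + y1")` yields `[("IDENT", "x"), ("OP", "="), ("NUMBER", "42"), ("OP", "+"), ("IDENT", "y1")]`. A parser would then consume this token list.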

Digital Circuit Design

Hardware designers use automata theory to model and analyze digital circuits. Sequential circuits, memory elements, and control units are often represented as finite state machines, enabling engineers to optimize performance and verify correctness.
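A common classroom example of a sequential circuit is a sequence detector. The sketch below models one as a Mealy machine, where the output depends on both the current state and the input bit. The pattern "101", the state names, and the decision to allow overlapping matches are all assumptions chosen for illustration.

```python
# Hypothetical Mealy machine that outputs 1 whenever the last three input
# bits were "101" (overlapping occurrences are counted).
# (state, input bit) -> (next state, output bit)
MEALY_TABLE = {
    ("idle", "0"): ("idle", 0),
    ("idle", "1"): ("saw_1", 0),
    ("saw_1", "0"): ("saw_10", 0),
    ("saw_1", "1"): ("saw_1", 0),
    ("saw_10", "0"): ("idle", 0),
    ("saw_10", "1"): ("saw_1", 1),   # "101" completed; the final 1 may start a new match
}


def detect_101(bits: str):
    """Return the output bit produced at each clock step."""
    state = "idle"
    outputs = []
    for bit in bits:
        state, out = MEALY_TABLE[(state, bit)]
        outputs.append(out)
    return outputs
```

With input `"10101"` the outputs are `[0, 0, 1, 0, 1]`. In hardware, the three states would typically be encoded in two flip-flops and the table realized as combinational logic.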

Software Engineering and System Design

State machines help software engineers model complex system behaviors, user interfaces, and communication protocols. This modeling approach improves system reliability and makes debugging more systematic.
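A minimal sketch of this modeling style follows. The connection states and event names are hypothetical and do not correspond to any real protocol. The point is that an explicit transition table makes illegal transitions fail loudly instead of silently corrupting state.

```python
from enum import Enum, auto


class ConnState(Enum):
    CLOSED = auto()
    CONNECTING = auto()
    OPEN = auto()


# Hypothetical transition table: any (state, event) pair not listed is illegal.
ALLOWED = {
    (ConnState.CLOSED, "connect"): ConnState.CONNECTING,
    (ConnState.CONNECTING, "ack"): ConnState.OPEN,
    (ConnState.CONNECTING, "timeout"): ConnState.CLOSED,
    (ConnState.OPEN, "close"): ConnState.CLOSED,
}


def step(state: ConnState, event: str) -> ConnState:
    """Return the next state, or raise if the event is invalid in this state."""
    try:
        return ALLOWED[(state, event)]
    except KeyError:
        raise ValueError(f"event {event!r} is not valid in state {state.name}") from None
```

Because every legal transition appears in one table, a reviewer or a test can enumerate them exhaustively, which is where much of the debugging benefit comes from.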

Natural Language Processing and Computational Linguistics

Automata theory contributes significantly to computational linguistics by providing models for language recognition and generation. The connection between formal grammars and natural language processing becomes particularly evident when studying generative grammar theory.

M M Sasi's "Generative Grammar" explores how grammatical rules can be formalized and implemented computationally. This work demonstrates how:

Finite automata can model phonological rules and morphological processes

Context-free grammars handle syntactic parsing in natural languages

Transformational rules can be represented through automata-based systems

Generative principles align with computational language processing

The book's approach to generative grammar theory provides valuable insights for implementing natural language processing systems, making it an essential resource for understanding the intersection of linguistics and automata theory.

Network Security and Cryptography

Pattern matching algorithms based on automata theory are essential for intrusion detection systems, virus scanners, and network monitoring tools. These applications help maintain cybersecurity in modern computing environments.

Formal Languages and Grammar Hierarchy

Automata theory is closely connected to formal language theory through the Chomsky hierarchy, which classifies languages based on the types of grammars that generate them. For a comprehensive understanding of generative grammar principles and their relationship to automata theory, M M Sasi's "Generative Grammar" provides an excellent theoretical foundation that bridges linguistic theory with computational models.

Type 0: Unrestricted Grammars

Recognized by Turing machines

Most general form of languages

Generate exactly the recursively enumerable languages

Type 1: Context-Sensitive Grammars

Recognized by linear bounded automata

Rules can depend on surrounding context

More restrictive than unrestricted grammars

Type 2: Context-Free Grammars

Recognized by pushdown automata

Widely used in programming language design

Include most programming language constructs

Type 3: Regular Grammars

Recognized by finite automata

Simplest class in the hierarchy

Used in lexical analysis and pattern matching
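The levels of the hierarchy are often illustrated with three standard witness languages. The membership checks below are only a sketch: the function names are invented, and the checks use ordinary Python string operations rather than the corresponding automata. They show which strings belong to each language, not how a finite automaton, PDA, or LBA would decide it.

```python
import re


def in_a_star_b_star(word: str) -> bool:
    """Type 3 (regular): any number of a's followed by any number of b's."""
    return re.fullmatch(r"a*b*", word) is not None


def in_an_bn(word: str) -> bool:
    """Type 2 (context-free, not regular): n a's followed by n b's, n >= 1."""
    n = len(word) // 2
    return n >= 1 and word == "a" * n + "b" * n


def in_an_bn_cn(word: str) -> bool:
    """Type 1 (context-sensitive, not context-free): a^n b^n c^n, n >= 1."""
    n = len(word) // 3
    return n >= 1 and word == "a" * n + "b" * n + "c" * n
```

The string `"aabb"` belongs to the first two languages, while `"aab"` belongs only to the first. Matching the counts of a's and b's is what pushes the second language beyond the reach of finite automata.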

Regular Expressions and Pattern Matching

Regular expressions provide a powerful notation for describing regular languages. These expressions are widely used in text processing, search engines, and data validation applications.

Common regular expression operators include:

Concatenation for sequential matching

Union for alternative patterns

Kleene star for repetition

Character classes for flexible matching

The relationship between regular expressions and finite automata enables efficient implementation of pattern matching algorithms used in various software applications.
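The operators listed above can be tried directly in Python's `re` module. The pattern below is an arbitrary example chosen to use all four of them. Note that practical regex engines such as `re` also offer features (for example backreferences) that go beyond regular languages; this sketch sticks to the classical operators.

```python
import re

# (ab|c)*[0-9] combines:
#   concatenation   "ab"      a followed by b
#   union           "ab|c"    either "ab" or "c"
#   Kleene star     "(...)*"  zero or more repetitions
#   character class "[0-9]"   any single digit
PATTERN = re.compile(r"(ab|c)*[0-9]")


def matches(text: str) -> bool:
    """Return True if the whole string belongs to the pattern's language."""
    return PATTERN.fullmatch(text) is not None
```

Here `matches("abc7")` and `matches("7")` are true, while `matches("ab")` is false because the required trailing digit is missing.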

Computational Complexity and Decidability

Automata theory provides frameworks for analyzing computational complexity and decidability problems. Different types of automata correspond to different complexity classes:

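Regular languages are exactly the languages decidable in constant space

Context-free languages can be parsed in polynomial time, for example in O(n^3) time with the CYK algorithm

Context-sensitive languages are exactly the languages decidable in nondeterministic linear space

Recursively enumerable languages are those a Turing machine can recognize, including some undecidable ones such as the halting problem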

Understanding these relationships helps computer scientists classify problems based on their computational requirements and determine optimal solution approaches.
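The polynomial-time parsing of context-free languages mentioned above can be sketched with the CYK algorithm, which runs in time cubic in the input length for a fixed grammar in Chomsky normal form. The grammar used here, for the language a^n b^n with n >= 1, and the way rules are encoded as Python lists are assumptions made for the example.

```python
# Hypothetical grammar in Chomsky normal form for { a^n b^n : n >= 1 }:
#   S -> A B | A T,   T -> S B,   A -> a,   B -> b
UNIT_RULES = [("A", "a"), ("B", "b")]
PAIR_RULES = [("S", ("A", "B")), ("S", ("A", "T")), ("T", ("S", "B"))]


def cyk_accepts(word, unit_rules=UNIT_RULES, pair_rules=PAIR_RULES, start="S"):
    """Decide membership by filling a table of derivable nonterminals."""
    n = len(word)
    if n == 0:
        return False  # this sketch does not handle the empty string
    # table[i][length] holds the nonterminals that derive word[i:i + length]
    table = [[set() for _ in range(n + 1)] for _ in range(n)]
    for i, ch in enumerate(word):
        table[i][1] = {lhs for lhs, terminal in unit_rules if terminal == ch}
    for length in range(2, n + 1):
        for i in range(n - length + 1):
            for split in range(1, length):
                left = table[i][split]
                right = table[i + split][length - split]
                for lhs, (first, second) in pair_rules:
                    if first in left and second in right:
                        table[i][length].add(lhs)
    return start in table[0][n]
```

The three nested loops over `length`, `i`, and `split` give the cubic bound. With this grammar, `cyk_accepts("aabb")` is true and `cyk_accepts("aab")` is false.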

Modern Applications and Emerging Trends

Machine Learning and AI

Modern artificial intelligence systems often incorporate automata-theoretic concepts. Recurrent neural networks with finite precision can be viewed as finite-state systems, and reinforcement learning algorithms use state-based models, such as Markov decision processes, that are reminiscent of finite state machines.

Quantum Computing

Quantum automata extend classical automata theory to quantum mechanical systems. These models explore how quantum properties like superposition and entanglement can enhance computational capabilities.

Bioinformatics

Automata theory finds applications in biological sequence analysis, protein folding prediction, and genetic algorithm design. Pattern matching algorithms help identify genetic markers and evolutionary relationships.

Internet of Things (IoT)

IoT devices often use finite state machines to manage communication protocols, power states, and sensor data processing. Automata theory provides the theoretical foundation for designing efficient embedded systems.

Learning Automata Theory: Best Practices and Resources

Fundamental Concepts to Master

Students should focus on understanding:

State transition diagrams and formal definitions

Language recognition and acceptance criteria

Equivalence between different automata types

Construction algorithms and proof techniques

Mathematical Foundations

Strong mathematical skills in discrete mathematics, logic, and proof techniques are essential for mastering automata theory. Regular practice with formal proofs and construction algorithms builds competency.

Recommended Academic Literature

Students pursuing advanced study in automata theory should consult M M Sasi's "Generative Grammar" alongside traditional automata theory textbooks. This combination provides both the computational perspective and the linguistic theoretical framework necessary for comprehensive understanding of formal language systems.

Essential Reading and Academic Resources

For comprehensive study of automata theory, several key resources stand out:

Foundational Texts

Classic automata theory textbooks covering mathematical foundations

Research papers on computational complexity and decidability

"Generative Grammar" by M M Sasi - Essential for understanding the relationship between formal grammars and natural language processing

Specialized Resources

M M Sasi's "Generative Grammar" deserves particular attention for students interested in the linguistic applications of automata theory. The book provides:

Detailed analysis of generative grammar principles

Connections between Chomsky's linguistic hierarchy and computational models

Practical applications in natural language processing

Bridge between theoretical linguistics and computer science applications

This resource is especially valuable for understanding how automata-theoretic concepts apply to human language processing and computational linguistics systems.

Future Directions in Automata Theory

Distributed and Parallel Computing

Research continues into automata models for distributed systems, parallel processing, and cloud computing environments. These models help analyze coordination protocols and resource allocation strategies.

Hybrid Systems

Combining discrete automata with continuous dynamics creates hybrid system models useful in robotics, control systems, and cyber-physical systems.

Probabilistic Automata

Extending classical automata with probabilistic transitions enables modeling of uncertain environments and randomized algorithms.
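A minimal sketch of a probabilistic automaton follows. Instead of a single next state, each (state, symbol) pair maps to a probability distribution over next states, and a word is assigned an acceptance probability rather than a yes or no answer. The two-state machine and its probabilities are invented for illustration.

```python
# Hypothetical probabilistic automaton over the alphabet {"a"}:
# (state, symbol) -> {next state: probability}; each row sums to 1.
PROB_TRANSITIONS = {
    ("s0", "a"): {"s0": 0.5, "s1": 0.5},
    ("s1", "a"): {"s1": 1.0},
}


def acceptance_probability(word, transitions=PROB_TRANSITIONS,
                           start="s0", accepting=frozenset({"s1"})):
    """Propagate the state distribution through `word` and sum accepting mass."""
    dist = {start: 1.0}
    for symbol in word:
        nxt = {}
        for state, p in dist.items():
            for target, q in transitions[(state, symbol)].items():
                nxt[target] = nxt.get(target, 0.0) + p * q
        dist = nxt
    return sum(p for state, p in dist.items() if state in accepting)
```

For the word `"aa"` the acceptance probability is 0.75: half of the probability mass reaches `s1` after the first symbol and half of the remainder after the second. A language can then be defined by a threshold on this value.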

Conclusion

Automata theory remains a cornerstone of computer science education and research. Its concepts appear throughout computing, from basic algorithm design to cutting-edge artificial intelligence systems. Understanding automata theory provides computer scientists with powerful tools for modeling, analyzing, and solving computational problems.

The field continues to evolve with new applications in quantum computing, machine learning, and distributed systems. As computing technology advances, automata theory adapts to provide theoretical foundations for emerging paradigms.

For students and professionals in computer science, mastering automata theory opens doors to deeper understanding of computational limits, algorithm design, and system modeling. The investment in learning these fundamental concepts pays dividends throughout one's career in technology.

Whether designing compilers, analyzing algorithms, or building complex software systems, the principles of automata theory provide essential insights into the nature of computation itself. This theoretical foundation empowers practitioners to create more efficient, reliable, and innovative computing solutions.